Using SSMs to push SOTA RL

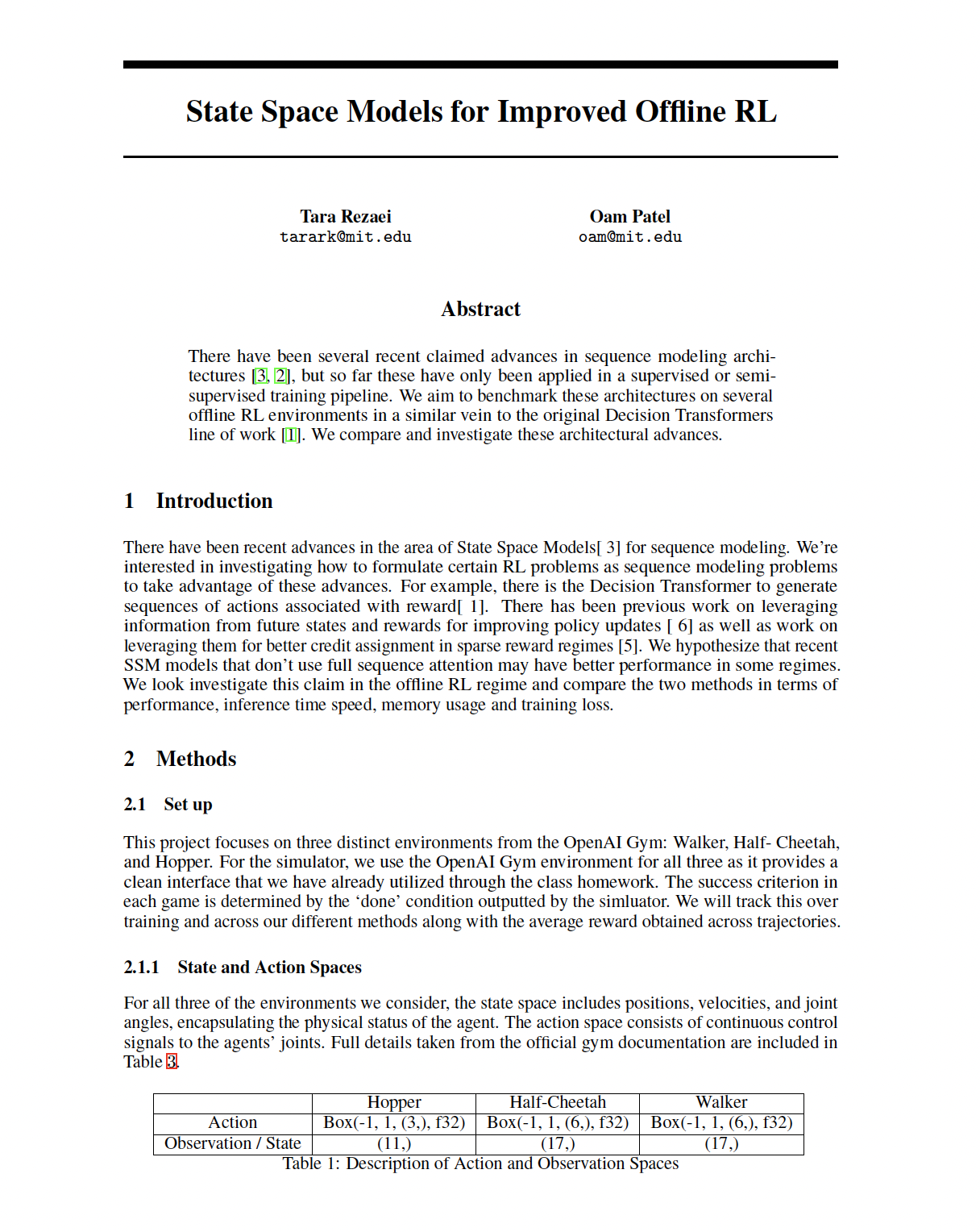

State space models (SSMs) have recently advanced results on a range of sequence modeling benchmarks. In this project, Oam and I aimed to benchmark SSMs against comparable Transformer architectures, specifically in an offline RL setting. This was our final project for 6.8200, Computational Sensorimotor Learning, with Prof. Agrawal.

Project Files

For a more detailed description of our approach and experiments, see the project file.